A/B Testing Starter Guide — Software Engineering Perspective


A/B Testing Starter Guide Intro

Hola! Rachel LE and I have recently been working on user engagement topics, which gave us a deeper understanding of A/B testing. We’d like to share our experience with individual engineers, analysts, and startups looking to increase 🚀 their revenue.

Rachel LE, a Senior BI Analyst with deep analytical skills, equally contributed to this article. Big shout-out!

Why A/B Testing?

A product with huge traffic and loyal users suddenly sees a decrease in conversions. Hypothetically changing a design or behavior seems like the solution, but there’s a chance users might dislike the new interface or behavior.

That’s exactly why we have A/B testing — a user experience research methodology consisting of a randomized experiment with two or more variants. You can compare multiple versions of a feature by testing users’ interactions with each variant.

Benefits of A/B Testing

In this era of the Internet of Things, business development and optimization depend heavily on the ability to learn and adapt, and A/B testing is at the core of that.

We use A/B tests to analyze and understand how things perform against a controlled baseline. A few years back, A/B tests were metaphorically referred to as the oil or fuel of the future; more recently the comparison has been with sunlight because, like solar rays, they will be everywhere and underlie everything. Big companies like Facebook, Airbnb, or Agoda run thousands of A/B tests per day and have developed multiple best-in-class features based on what those tests taught them. Google famously tested 41 different shades of blue for a button to see which one got the best click-through rate.

Changing something in the application is risky because it might hurt engagement, whereas A/B testing lets you prove your hypothesis before committing to the change. Here’s what it can help with:

- Higher conversion rates: Test colors, micro-copy, CTAs, titles, or information structures to increase user engagement.

- Reduce bounce rates: Test UI and UX variations to keep users engaged longer.

- Low-risk modifications: Make small, targeted changes instead of large redesigns.

- Ease of analysis: A/B tests offer clear metrics for determining a winning variant.

- Reduce cart abandonment: Test cart display, text, and information to improve checkout completion rates.

Types of A/B Tests

- A/B Testing: Two variants of a feature (call-to-action button, page design, etc.) are shown to different groups of users/visitors at the same time to determine which variant has the greater impact and drives business metrics.

- Multivariate Testing: Multiple variations of multiple page elements are tested simultaneously to determine which combination of variations performs best out of all possible combinations. It’s more complicated than a regular A/B test and is best suited for advanced marketing, product, and development professionals.

A simple formula to calculate the total number of versions in a multivariate test:

[# of variations for first element] x [# of variations for second element] = total number of versions to test

- Split URL Testing: An entirely new version of an existing web page is served from a different URL to see which one performs better. The total traffic is split into a control group (original URL) and a test group (new URL).

- Multipage Testing: Changes to particular elements are applied across multiple pages so that users/visitors get a consistent experience as they navigate the website.
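To make the multivariate formula above concrete, here is a minimal Python sketch. The page elements and their variations are made up for illustration; the point is only that the number of versions is the product of the variation counts, and that every combination needs its own share of traffic.

```python
from itertools import product
from math import prod

# Hypothetical page elements and their variations (illustrative only).
elements = {
    "headline": ["Save time", "Save money"],
    "cta_color": ["gray", "orange", "green"],
}

# Total versions = product of the number of variations of each element.
total_versions = prod(len(variations) for variations in elements.values())

# Enumerate every concrete combination that would have to be served.
combinations = [
    dict(zip(elements, combo)) for combo in product(*elements.values())
]

print(total_versions)     # 2 x 3 = 6
print(len(combinations))  # same number, one entry per version
```

Adding a third element with three variations would already mean 18 versions, which is why multivariate tests need much more traffic than a plain A/B test.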

For more details on different types of A/B tests, I recommend this article: VWO’s guide.

How to A/B Test?

Prerequisites

- Research: Prepare the experiment with data from the application you’re going to test: how much traffic the area under test receives and what the conversion goals of the different pages are. Google Analytics, Mixpanel, etc., are very helpful tools for gathering this data.

-

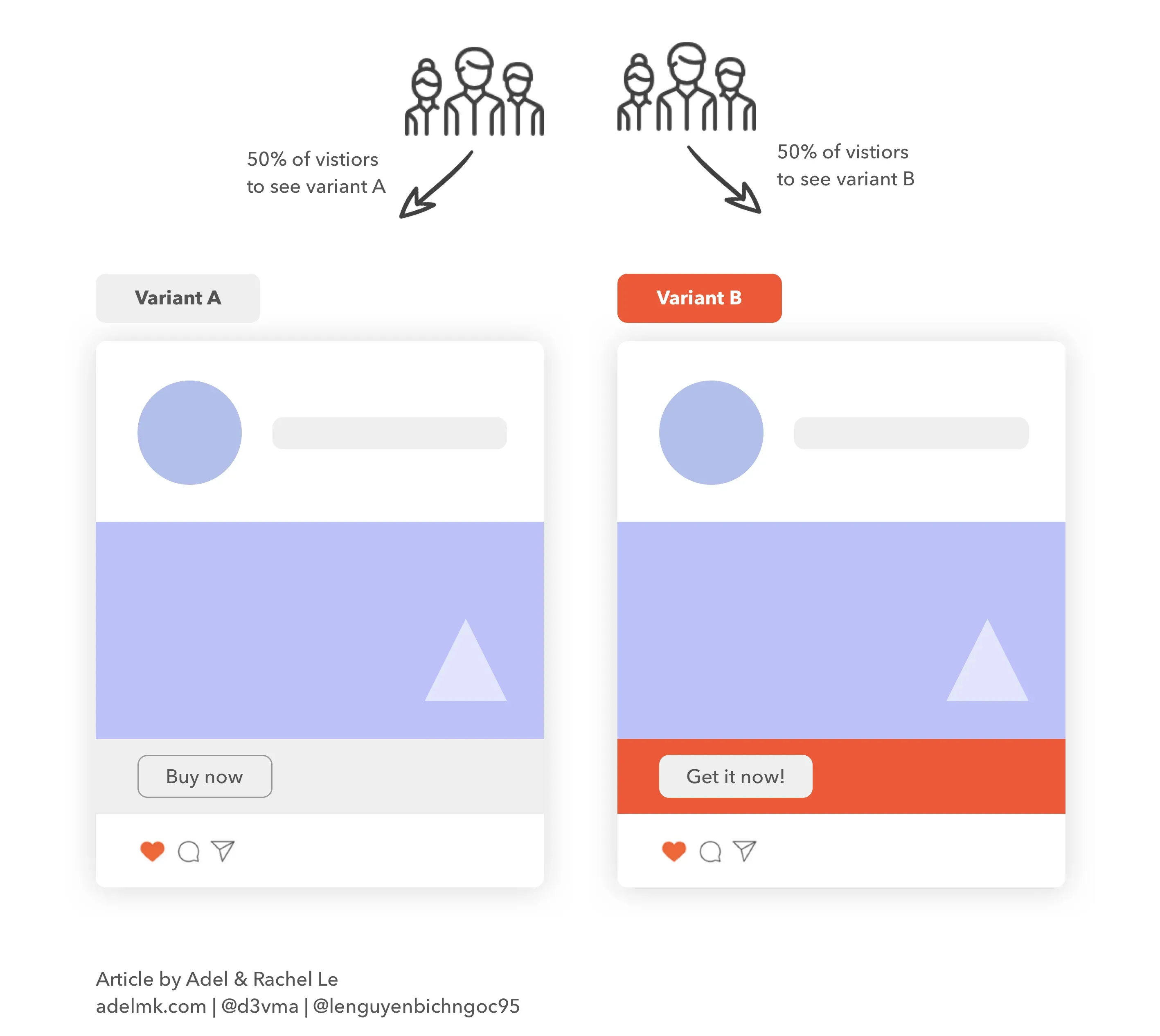

Define Variants: Look at the image above (1st of A/b testing types) as we have two variants, divided equally among all users — let’s say you have a daily 100 users to your website:

- 50% of the users (50 user) to see variant A where the CTA is gray.

- 50% of users to see variant B where the CTA is more standing out with an orange background.

- Understand objectives & develop a hypothesis: Changes introduced to users lead to changes in business metrics. Agreeing on a clearly defined objective for the test with stakeholders is therefore essential; it acts as a north star that guides hypothesis development and analysis.

“If I had only one hour to save the world, I would spend fifty-five minutes defining the problem, and only five minutes finding the solution.” — Albert Einstein. -

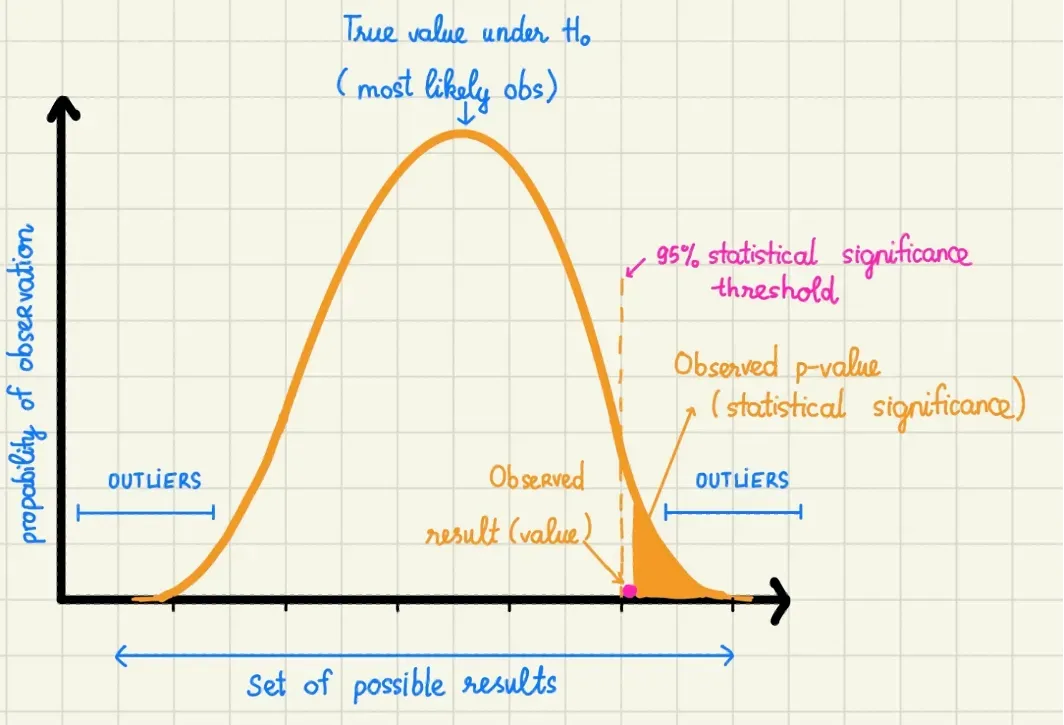

Determine test power, significance level & distribution of variant: Next, an essential step in experiment design is to determine practical significance boundary, AKA test power. Essentially, test power helps to unlock an assumption of how much change in business metric would matter to the organization. For example, if we believe an uplift of $5 in revenue per user on average is our test power, when we achieve that we can roll out the winner variant to production. Another important statistic measurement is the significance level (alpha) and p-value. It helps us to determine whether we would reject and accept the null hypothesis.
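To get a feeling for how significance level, power, and the minimum detectable effect interact, here is a rough sketch that estimates how many users each variant needs. It assumes the metric is a conversion rate (not revenue per user as in the example above) and uses the common normal approximation for comparing two proportions; the function name and the sample numbers are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_detectable_effect,
                            alpha=0.05, power=0.80):
    """Approximate users needed per variant (two-sided test, two proportions).

    baseline_rate: current conversion rate of the control, e.g. 0.10
    min_detectable_effect: absolute uplift worth detecting, e.g. 0.02
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_effect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance level
    z_beta = NormalDist().inv_cdf(power)           # test power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed numbers: 10% baseline conversion, 2 percentage points of uplift.
n_large_effect = sample_size_per_variant(0.10, 0.02)
# Halving the effect we want to detect needs far more users.
n_small_effect = sample_size_per_variant(0.10, 0.01)
print(n_large_effect, n_small_effect)
```

The takeaway is the trade-off rather than the exact numbers: the smaller the change you want to detect, or the higher the power you ask for, the more traffic (and time) the test needs.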

Running A/B Tests

- Experiment’s scope: Large websites with millions of users and lots of data need to limit the experiment’s scope; it’s preferable to run experiments on specific categories.

For example, a fashion web application might run the experiment only on the Women’s clothes category to get a clearer outcome, which makes it easier for analysts to understand user behavior.

- Experiment’s period: Experiments shouldn’t run forever. They should be time-boxed and not run for very long; a few weeks is usually a good period for getting a reliable outcome.

If you are the software engineer in charge of an A/B test, be sure to schedule it as defined in the Prerequisites, actively monitor it, and inform the relevant stakeholders once the test ends.
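From the engineering side, scope and period usually end up as a couple of checks in front of the variant assignment. Below is a minimal sketch, assuming users are identified by a stable ID and split equally by hashing that ID. The experiment name, category, and dates are made up, and this is one possible approach rather than how any particular tool does it.

```python
import hashlib
from datetime import date

# Hypothetical experiment settings (illustrative only).
EXPERIMENT = {
    "name": "cta_color_test",
    "category": "women-clothes",  # scope: only this category is tested
    "start": date(2024, 3, 1),    # period: roughly three weeks
    "end": date(2024, 3, 21),
    "variants": ["A", "B"],       # split equally
}


def assign_variant(user_id, category, today, experiment=EXPERIMENT):
    """Return the user's variant, or None if they are outside the experiment."""
    if category != experiment["category"]:
        return None  # out of scope
    if not (experiment["start"] <= today <= experiment["end"]):
        return None  # experiment not running
    # Hash experiment name + user id so the same user always gets
    # the same variant, without storing any assignment state.
    key = f"{experiment['name']}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(experiment["variants"])
    return experiment["variants"][bucket]


print(assign_variant("user-42", "women-clothes", date(2024, 3, 5)))
print(assign_variant("user-42", "men-clothes", date(2024, 3, 5)))  # None
```

Hashing instead of calling a random generator on every request keeps the experience consistent for returning users, which matters when the test runs for several weeks.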

Post A/B Test

-

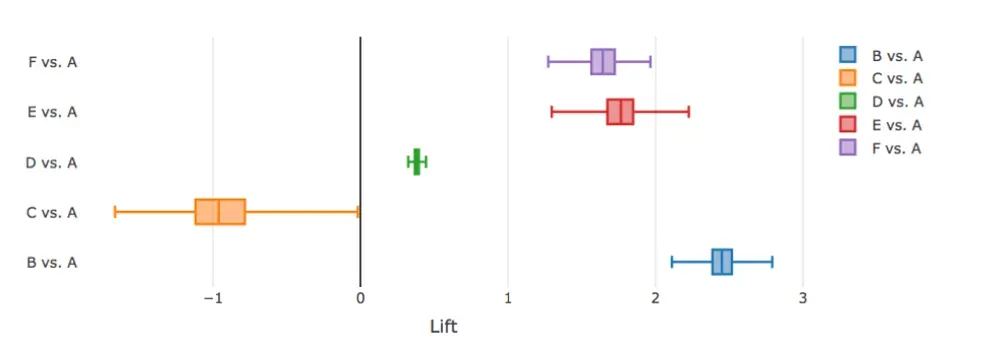

Observe and analyze: As a last step, which is a very important one is to find your winner variant, and make analysis of your results. Apart all the evaluation on test power/p-value, my favorite visualization is using box plot when it comes to evaluating multiple variants at once. For example, the below plot is telling me that B (the dark blue box) has the greatest uplift compared to A and that I would not have to select between E and F (the red and purple ones) which have very similar performance in this case.

If I did have to choose between them, that would suggest extending the test time frame a bit to expose E and F to more visitors.

- Business decisions & feature rollout: After declaring the winner, it’s time to deploy the winning variant. Don’t forget to document all the results and data gathered for future use; this is extremely important for understanding your audience step by step with each experiment.
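For the p-value part of the analysis, a two-proportion z-test is a common starting point when the metric is a conversion rate. The sketch below uses only Python’s standard library. The counts are invented, and in practice your analyst or experimentation tool may use a different test, so treat it as an illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conversions_a, users_a, conversions_b, users_b):
    """Two-sided z-test for the difference between two conversion rates.

    Assumes both groups are reasonably large and the pooled rate is
    strictly between 0 and 1 (otherwise the standard error is zero).
    """
    rate_a = conversions_a / users_a
    rate_b = conversions_b / users_b
    # Pooled rate under the null hypothesis "A and B convert equally".
    pooled = (conversions_a + conversions_b) / (users_a + users_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up results: 200/4000 conversions for A, 260/4000 for B.
alpha = 0.05
z, p_value = two_proportion_z_test(200, 4000, 260, 4000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("reject the null hypothesis" if p_value < alpha
      else "fail to reject the null hypothesis")
```

Remember that a statistically significant result still has to clear the practical significance boundary agreed on in the Prerequisites before it justifies a rollout.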

What to Avoid?

I’ve personally seen lots of businesses lose money and effort by making critical A/B testing mistakes.

Testing is a smart way to improve conversions and increase revenue if it’s run properly and understood correctly. In this section I list the most common mistakes I’ve seen, all of which can be avoided for a successful A/B testing journey.

- Testing too many changes at once: It’s tempting to test many changes at once, but too many changes make it difficult to pinpoint which change influenced a variant’s success or failure.

- Relying on others’ data: Tests run on someone else’s application or website won’t necessarily work the same for you! Hypotheses differ in each case, and if your hypothesis is invalid, your test won’t deliver the success you want. Research your own scope, users, and experiences, build your own hypothesis from that data, then run tests to get a clearer picture.

- Declaring a winner too soon: It might be exciting to see an early winner that increases conversions and sales for your product, but you shouldn’t rush to end the experiment.

Tests need to run for a proper amount of time, with enough users and interactions, to reach statistically significant results.

- Using unbalanced traffic: To get a clear outcome, a test should run with an appropriate amount of traffic to produce significant results. Using much more or much less traffic than the test needs increases the chance that it fails to produce correct results.

- Ignoring external factors: External factors like big sales days or unexpected traffic spikes might affect your test results. Conclusions might be insignificant and comparisons unfair when one day has far more traffic than the day before. That leads to confusion and an unhealthy experiment.

Technical Highlights for A/B Testing

As a Software Engineer, it’s your responsibility to implement A/B tests and deploy them to the production environment, so you should also consider the following:

- Overcome any technical difficulties or performance issues: Make sure you’re not adding too many external resources that might slow down page/screen loading, which in turn leads to insignificant results and failed tests.

- Include tracking data for a comprehensive analysis: Track the relevant actions with descriptive labels so that they can be measured in your report at the end of the experiment.

- Set up general configurations for future tests: If you plan to run tests often, consider keeping general configs for upcoming tests to avoid redundancy and make the development process more effective.

- Clean up unused code after the test ends: Once an experiment ends, you should clean up any unused code in your codebase. Just like toilet paper, but with rm -rf to get rid of any leftovers.
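As a rough illustration of the tracking and general-config points above, here is a small sketch of a reusable experiment config plus a helper that builds tracking events with descriptive labels. All class, field, and event names are hypothetical; adapt them to whatever analytics tool you use.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ExperimentConfig:
    """One reusable shape for every experiment (hypothetical fields)."""
    name: str
    variants: tuple
    start: date
    end: date
    goal_event: str  # the action that counts as a conversion

    def is_active(self, today):
        return self.start <= today <= self.end


def build_tracking_event(config, user_id, variant, action):
    """Build a descriptive payload that analysts can group by later."""
    return {
        "experiment": config.name,
        "variant": variant,
        "user_id": user_id,
        "action": action,
        "is_goal": action == config.goal_event,
    }


config = ExperimentConfig(
    name="cta_color_test",
    variants=("A", "B"),
    start=date(2024, 3, 1),
    end=date(2024, 3, 21),
    goal_event="cta_click",
)
event = build_tracking_event(config, "user-42", "B", "cta_click")
print(event)
```

Keeping every experiment in one config shape also makes the cleanup step easier: when a test ends, you remove its config entry and the code paths that reference its name.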

Big applause to Rachel LE for her contribution to this article! Don’t forget to show your love ❤️ by clapping 👏 for this article and sharing your views 💬 in the comments.